Pendulum Feedback Control

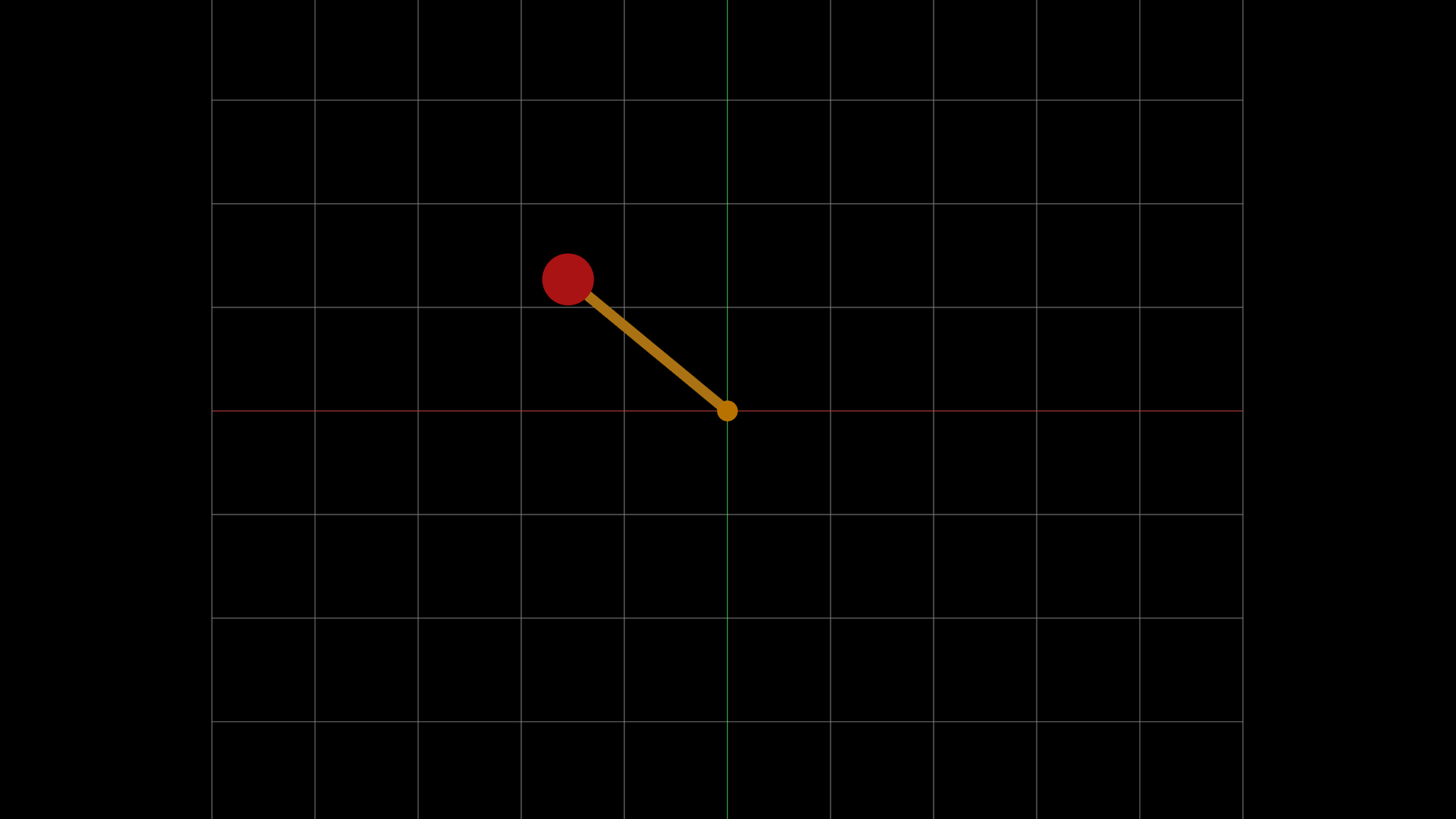

Considering a simple pendulum: a mass m is attached to a massless rigid rod, and is constrained to move along an arc of a circle centered at the pivot point; assuming L is the fixed length of the connecting rod, \theta is the angle it makes with the vertical axis, there is a friction with a coefficient d and an external torque \tau applied at the base.

It is possible to derive the governing equations for the motion of the mass, and an equation which can be solved to determine the period of oscillation.

It would be possible to use generalized Lagrange or Hamilton formulation, but since the system is relatively simple, the equations can be derived from Newton laws directly. The pendulum is constrained to move along an arc, it is possible to write Newton equation for the displacement of the pendulum along the arc with the origin at the bottom and a positive direction to the right.

Three forced applied:

- conservative gravitational force g;

- non conservative friction \mu;

- external torque \tau.

These forces can be specified for the system:

- the gravitation force can be split on two part: a component directed from the pivot to the pendulum which balanced by the tension T in the rod and a component along the arc (-m\,\,g\,\sin(\theta));

- the friction is going along the arc in the opposite direction proportional to the angular velocity (-\mu\, \dot \theta);

- a torque \tau = u that will have to be apply to the pendulum (mLu).

Using Newton’s second law:

\begin{aligned} & \mathbf F = m\,\mathbf a = m\,L \, \ddot \theta\\ & -m \, g\, \sin(\theta) -d\,L\,\dot \theta + m\, L\, u = m \,L\, \ddot \theta \\ &\ddot \theta = -\dfrac{g}{L}\sin(\theta) - \dfrac{d}{m}\dot \theta + u = -\omega^2 \sin(\theta) -\gamma \dot \theta + u \end{aligned}

where \omega = \sqrt{\frac{g}{L}} is the natural frequency of the pendulum and \gamma = \frac{\mu}{m}.

Introducing the state \mathbf x = [\theta \quad \dot \theta]^T, given by the angular position \theta and velocity \dot \theta, this second order differential equation can be rewritten as a system of first order equations:

\begin{aligned} & \dot{\mathbf{x}} = \mathbf{f} (\mathbf{x}) \\ & \dfrac{\mathrm d}{\mathrm dt} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} x_2 \\ -\omega^2 \sin(x_1) -\gamma x_2 - u \end{bmatrix} \end{aligned}

This is a non-linear system, and it is impossible to find an exact solution for it, but it is possible to analyze this system around its points of equilibrium and their respective stability.

System linearization

Given a nonlinear input-output system:

\frac{\mathrm d}{\mathrm dt} \mathbf x = f(\mathbf x, \mathbf u)

it is possible to linearize the dynamics near a fix point (\bar {\mathbf x}, \bar {\mathbf u}) where f(\bar {\mathbf x}, \bar {\mathbf u}) = \mathbf 0 expanding with a Taylor series:

\mathbf{f}(\mathbf{x} + \Delta \mathbf x, \mathbf u + \Delta \mathbf u) = \mathbf{f}(\bar{\mathbf{x}}, \bar{\mathbf{u}}) + \frac{D\mathbf{f}}{D\mathbf{x}} \bigg\vert_{\bar{\mathbf{x}}, \bar{\mathbf u}} \Delta \mathbf{x} + \frac{D\mathbf{f}}{D\mathbf{u}} \bigg\vert_{\bar{\mathbf{x}}, \bar{\mathbf u}} \Delta \mathbf{u} + \cdots

For small displacement around the fix point, the higher order terms are negligibly small. Dropping the \Delta and moving to a system of coordinates where \bar{\mathbf x} and \bar {\mathbf u} are the origin, the linearized system can be written as:

\frac{\mathrm d}{\mathrm dt} \mathbf x = \mathbf {A\,x} + \mathbf {B\,u}

In absence of control (i.e. \mathbf u = \mathbf 0), the dynamical system becomes:

\frac{\mathrm d}{\mathrm dt} \mathbf x = \mathbf {A\,x}

The solution is determined entirely by the eigenvalues and eigenvector of \mathbf A. Computing the Jacobian for the system \mathbf F(\mathbf x, \mathbf u):

\begin{aligned} & \frac{D \mathbf f}{D \mathbf x} = \mathbf A = \begin{bmatrix} 0 & 1 \\ -\omega^2 \cos(x) & -\gamma \end{bmatrix} \\ & \frac{D \mathbf f}{D \mathbf u} = \mathbf B = \begin{bmatrix} 0 \\ 1 \end{bmatrix} \end{aligned}

The equilibrium points of the matrix \mathbf A in absence of controls are (x_1, x_2) = (n \, \pi,0), n \in \mathbb Z, with even n = 0, 2, 4, \dots corresponding to the pendulum in the down position and the odd n = 1, 3, 5, \dots in the up position.

With these information it is possible to linearize around these points. For the down conditions:

\dfrac{\mathrm d}{\mathrm dt} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ -\omega^2 & -\gamma \end{bmatrix}

The characteristic polynomial is:

\lambda^2 +\gamma\,\lambda + \omega^2 = 0

If there is no dumping (\gamma = 0) then the eigenvalues will be complex and it will be oscillating around \theta =0 with a frequency:

\begin{aligned} & \lambda_{12} = \pm i\,\omega \\ & \omega = \sqrt \frac{g}{L} \end{aligned}

In the general case, the eigenvalues are:

\lambda_{1,2} = \frac{-\gamma \pm i \sqrt{4\omega^2 - \gamma^2} }{2}

Since the real part is negative, the solution will converge oscillating around the equilibrium point.

Considering now the up condition:

\dfrac{\mathrm d}{\mathrm dt} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ \omega^2 & -\gamma \end{bmatrix}

The characteristic polynomial is:

\lambda^2 +\gamma\,\lambda - \omega^2 = 0

In this case, there is two real eigenvalues:

\lambda_{1,2} = \frac{-\gamma \pm \sqrt{\gamma^2 + 4\omega^2} }{2}

Since one of the eigenvalues is positive (\gamma^2 + 4\omega^2 > \gamma^2), those roots will have an opposite sign, and therefore these are hyperbolic points (or saddles). If there is no damping, the eigenvalues reduces to \pm \omega.

Feedback control

Feedback control is a control strategy where the system’s output is continuously monitored and compared to a desired reference or setpoint. Based on this comparison, corrective actions are taken to minimize the error between the actual output and the target value. This is achieved by adjusting the input to the system in real-time, often using a control law like u = -\mathbf{K\,x}, which modulates the system’s behavior through state feedback.

In the context of the pendulum, open-loop control strategies are inadequate because they do not actively respond to the system’s evolving state. The dynamics of the pendulum, determined by the matrix \mathbf A, are intrinsic to the system and are fully defined by the physical parameters (such as \omega and \gamma). Without feedback, no external control input can modify these dynamics—specifically, the eigenvalues of \mathbf A—meaning the system will either oscillate or become unstable depending on the equilibrium point.

For a pendulum, especially when trying to stabilize it in an upright position (a naturally unstable configuration), feedback control is necessary. Without feedback, the system will naturally drift due to the inherent instability of the upright position, leading to failure in maintaining the pendulum’s position. By introducing feedback, the eigenvalues of the closed-loop system (i.e., \mathbf{A} - \mathbf{B\,K}) can be adjusted to place the system in a stable configuration, ensuring the pendulum remains in the desired position.

No open-loop control strategy can change the dynamics of the system, given the eigenvalues of \mathbf A. However, with full-state control feedback, given by:

u = -\mathbf{K\,x} The closed-loop system becomes:

\frac{\mathrm d}{\mathrm dt} = \mathbf {A\,x} + \mathbf {B\,u} = (\mathbf A - \mathbf{B \, K}) \mathbf x

With an opportune choice of \mathbf K it is possible to change the eigenvalue of the systems in a way they become stable. In the particular case it is possible to get an analytical formula:

\mathbf A - \mathbf{B\, K} = \begin{bmatrix} 0 & 1 \\ \omega^2 - A & -\gamma - B \end{bmatrix}

The characteristic polynomial is:

\lambda^2 +(\gamma + B)\lambda - \omega^2-A = 0

\lambda_{1,2} = \frac{-(\gamma + B) \pm \sqrt{(-\gamma -B)^2 + 4(\omega^2-A)} }{2}

It is the possible to solve for A and B:

\begin{aligned} & \lambda_1 + \lambda_2 = -(\gamma + B) \\ & \lambda_2 - \lambda_1 = \sqrt{(-\gamma - B)^2 + 4(\omega^2-A)}\\ & \left( \lambda_2 - \lambda_1 \right)^2 = \left(\lambda_1 + \lambda_2\right)^2 + 4(\omega^2-A)\\ & -2 \lambda_1\lambda_2 = 2\lambda_1\lambda_2 + 4(\omega^2 -A) \\ & A = \lambda_1\lambda_2 + \omega^2\\ & B = -\gamma -\left(\lambda_1 + \lambda_2 \right) \end{aligned}

As example, for the system with no dumping (\gamma = 0) and unitary frequency (\omega = 1) a control \mathbf K = [4 \quad 4]^T gives as eigenvalues:

\begin{aligned} & \det(\mathbf A - \mathbf K - \lambda \, \mathbf I) = \begin{vmatrix} - \lambda & 1 \\ -3 & -\lambda - 4 \end{vmatrix} = 0 \\ & \lambda^2 + 4\,\lambda + 3 = (\lambda + 1) (\lambda + 3) = 0 \\ & \lambda_1 = -1, \quad \lambda_2 = -3 \end{aligned}

The eigenvalues are negatives and therefore the system is now stable. Starting with these desired eigenvalues [-1 \quad -3]^T, it is possible to compute the control:

\begin{aligned} A & = \lambda_1\lambda_2 + \omega^2 = -1\,-3 + 1 = 4 \\ B & = -\gamma -(\lambda_1 + \lambda_2) = 0 - (-1\,-3) = 4 \end{aligned}

which gives the same results as before.

Neural networks

I implemented a program that, in addition to classical feedback control, use a neural network trained using a genetic algorithm (GA) to optimize the control strategy.

The network takes as inputs the pendulum’s angular position, velocity, and acceleration, normalized by dividing by 2\pi to ensure it is less or equal to 1. The control action predicted by the network is used to compute the control input based on the current state of the pendulum, with the aim of stabilizing it near a desired reference point (e.g., the pendulum’s upright or downward equilibrium position).

// compute the motion for 10 seconds

const size_t simSteps = static_cast<size_t>(T_C(10) / deltaTime);

tp::thread_pool pool;

for (size_t i = 0; i < pSize; ++i)

{

auto floop = [this, &fitness = fitness[i], idx = i, &theta0_, &thetaDot0_, &thetaDDot0_, &simSteps]() {

std::array<T, 2> y1_, y2_;

math::ode::Params<T> params_copy = params;

std::vector<T> inputs(nn::NNParams().inputs), nn_out(2);

T t_ = T_C(0.0), theta_ = theta0_, thetaDot_ = thetaDot0_, thetaDDot_ = thetaDDot0_;

for (size_t j = 0; j < simSteps; ++j)

{

inputs[0] = theta_ / T_C(2.0 * T_PI);

inputs[1] = thetaDot_ / T_C(2.0 * T_PI);

inputs[2] = thetaDDot_ / T_C(2.0 * T_PI);

mNN->feedforward(inputs, nn_out, idx, false);

params_copy.u = -KGainNN * (nn_out[0] * (theta_ - control::Params<T>().y_ref[0]) +

nn_out[1] * (thetaDot_ - control::Params<T>().y_ref[1]));

y1_ = {theta_, thetaDot_};

ma::rk4singlestep(ge::motionFun, deltaTime, t_, y1_, y2_, params_copy);

theta_ = y2_[0];

thetaDot_ = y2_[1];

// run the function again to get the correct thetaDDot y2_ is now the good input, y1_ is the output

ge::motionFun(time, y2_, y1_, params_copy);

thetaDDot_ = y1_[1];

fitness += std::abs(theta_ - control::Params<T>().y_ref[0]);

fitness += std::abs(thetaDot_ - control::Params<T>().y_ref[1]);

t_ += deltaTime;

}

fitness /= T_C(simSteps);

};

}

At each iteration, the pendulum’s dynamics are simulated over a fixed time horizon (10 seconds), using a numerical integration method (Runge-Kutta 4th order). The GA evolves a population of neural networks, with each individual in the population evaluated based on a fitness function. The fitness function calculates the total error between the pendulum’s current state (angular position and velocity) and the desired reference state over the simulation period. Specifically, for each time step, the absolute errors between the predicted position and velocity and their respective reference values are accumulated, and the average error is computed at the end of the simulation.

// sort in ascending order and store the index

std::iota(idx.begin(), idx.end(), 0);

std::sort(idx.begin(), idx.end(), [&fitness](size_t i1, size_t i2) { return fitness[i1] < fitness[i2]; });

mNN->UpdateWeightsAndBiases(idx);

mNN->CreatePopulation();

bestFitness = fitness[idx[0]];

if (flags & pflags::VERBOSE)

{

for (size_t i = 0; i < nn::NNParams().top_individuals; ++i)

LOGGER(logging::INFO) << std::string("Top " + num_to_string(i) + " : " + num_to_string(idx[i]) +

" fitness : " + num_to_string(fitness[idx[i]]));

std::vector<T> nn_out(2);

ComputeControlNN(nn_out);

// print the best performer fitness

LOGGER(logging::INFO) << std::string(

"Best performer fitness : " + num_to_string(fitness[idx[0]]) + num_to_string(bestFitness) + " K: [" +

num_to_string(K[0][0]) + " " + num_to_string(K[1][0]) + "]" + " K_NN: [" +

num_to_string(KGainNN * nn_out[0]) + " " + num_to_string(KGainNN * nn_out[1]) + "]");

}

nGenerations++;

mNN->UpdateEpochs();

The genetic algorithm sorts the neural networks based on their fitness scores, selects the top-performing individuals, and applies crossover and mutation to generate a new population. This process continues for multiple generations, with the best-performing network from each generation used to update the control strategy. The control gain K_{\text{NN}} is adjusted using the network’s output to influence the pendulum’s dynamics. Over successive generations, the system converges toward an optimal control policy, with the fitness function driving the minimization of the pendulum’s deviation from the desired reference.

The best fitness is tracked and used to monitor the system’s performance, allowing for real-time adjustments to control parameters as the training progresses.

Complete code

The complete code is available on GitHub. The repository contains detailed instructions on how to set up the environment, compile, run the training and the tests and it is available at the following link.