Understanding the attention mechanism in transformer networks

The attention mechanism is the central innovation of the Transformer architecture and has reshaped how models handle sequential data in natural language processing. Unlike recurrent models, which process a sequence one token at a time, attention lets the model consider all positions in the input simultaneously. Each word in a sentence can therefore relate directly to every other word, regardless of the distance between them, which makes long-range dependencies much easier to capture.

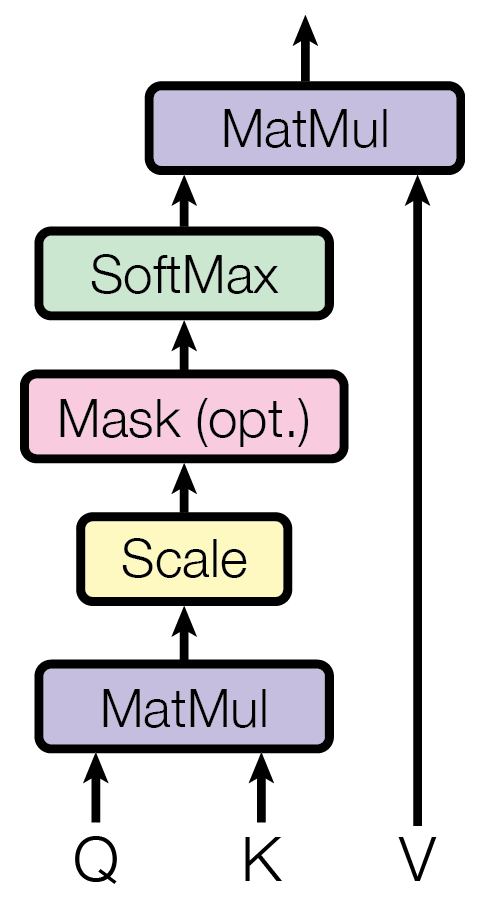

At its core, the attention mechanism computes a set of attention weights that determine how relevant each word in the sequence is to a particular word. For each word in the input, the model produces three vectors through learned linear transformations: the query, the key, and the value. These vectors are derived from the word embeddings (or, in deeper layers, from the previous layer’s outputs). The query and key determine how much attention one word pays to another, and the value carries the information that gets passed along.
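As a minimal sketch of the projection step, the Python code below multiplies each word embedding by three weight matrices. The matrices `W_q`, `W_k`, and `W_v`, the 4-dimensional embeddings, and the helper `project` are hypothetical placeholders chosen so the arithmetic is easy to follow; a real model learns these weights during training and usually adds bias terms, which are omitted here.

```python
def project(x, W):
    """Multiply row vector x (length d_in) by matrix W (d_in rows, d_out columns)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

# Hypothetical "learned" weights (d_model = 4, d_k = 2); a trained model learns these.
W_q = [[1, 0], [0, 1], [1, 0], [0, 1]]
W_k = [[0, 1], [1, 0], [0, 1], [1, 0]]
W_v = [[1, 1], [0, 0], [0, 1], [1, 0]]

# Made-up embeddings for a three-word sentence, one row per word.
embeddings = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 1.0, 0.0, 0.0],
]

# Every word gets its own query, key, and value vector from the same three matrices.
queries = [project(x, W_q) for x in embeddings]
keys = [project(x, W_k) for x in embeddings]
values = [project(x, W_v) for x in embeddings]

print(queries[0], keys[0], values[0])  # [2.0, 0.0] [0.0, 2.0] [1.0, 2.0]
```

Because the same three matrices are shared across all positions, the projection treats every word identically; only the attention step that follows mixes information between words.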

The process takes the query vector of a word and computes its dot product with the key vector of every word in the sequence, including the word itself. Each dot product measures the compatibility, or similarity, between the query and that key. The results are divided by √d_k, where d_k is the dimension of the key vectors, which keeps the scores from growing with the vector size and saturating the softmax. A softmax function then turns the scaled scores into normalized attention weights that sum to 1 and reflect how important each word is in the context of the current word.
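A minimal sketch of this scoring step for a single query, assuming the query and key vectors have already been computed. The helper names `softmax` and `attention_weights` and the toy vectors are illustrative, not taken from any library:

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Dot product of one query with every key, scaled by 1/sqrt(d_k), then softmax."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    return softmax(scores)

weights = attention_weights([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print([round(w, 3) for w in weights])  # [0.401, 0.198, 0.401]
```

The first and third keys match the query equally well, so they receive equal weight, while the orthogonal second key gets less. Subtracting the maximum score does not change the softmax result; it only prevents overflow when scores are large.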

These attention weights are used to create a weighted sum of the value vectors, producing a new representation for each word that incorporates information from the entire sequence. This allows the model to focus on relevant parts of the input when processing each word, effectively capturing context and relationships between words.
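Written out, the weight that word i assigns to word j, and the new representation o_i built from the value vectors v_j, are:

$$
\alpha_{ij} = \frac{\exp\!\left(q_i \cdot k_j / \sqrt{d_k}\right)}{\sum_{l} \exp\!\left(q_i \cdot k_l / \sqrt{d_k}\right)}, \qquad o_i = \sum_{j} \alpha_{ij}\, v_j
$$

Stacking the query, key, and value vectors as the rows of matrices Q, K, and V gives the compact scaled dot-product attention formula from the original Transformer paper, with the softmax applied to each row:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V
$$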

Multi-head attention

An extension of this concept is multi-head attention, which runs several attention mechanisms, or “heads,” in parallel. Each head has its own learned query, key, and value projections into a lower-dimensional subspace of the embedding, allowing the model to capture different types of relationships and patterns simultaneously. The outputs of all the heads are then concatenated and passed through a final linear projection to produce the layer’s output.
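The following is a minimal pure-Python sketch of multi-head attention, not a production implementation. It assumes each head has size `d_model / num_heads`, as in the original Transformer, and uses random numbers as stand-ins for learned weights; the names `multi_head_attention`, `heads`, and `W_o` are hypothetical. Masking, dropout, bias terms, and batching are left out.

```python
import math
import random

def matmul(A, B):
    """Multiply matrix A (n x m) by matrix B (m x p); both are lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(K[0])
    weights = [
        softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K])
        for q_row in Q
    ]
    return matmul(weights, V)

def multi_head_attention(X, heads, W_o):
    """Run each head on its own Q/K/V projections, concatenate the results, project with W_o.

    heads: list of (W_q, W_k, W_v) tuples, each d_model x d_head.
    W_o:   (num_heads * d_head) x d_model output projection.
    """
    head_outputs = [attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))
                    for W_q, W_k, W_v in heads]
    # Concatenate the heads' outputs for each position: [head_1 | head_2 | ...].
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(X))]
    return matmul(concat, W_o)

# Toy setup with random placeholder weights: 3 tokens, d_model = 4, 2 heads of size 2.
rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

d_model, num_heads = 4, 2
d_head = d_model // num_heads
X = rand_matrix(3, d_model)
heads = [tuple(rand_matrix(d_model, d_head) for _ in range(3)) for _ in range(num_heads)]
W_o = rand_matrix(num_heads * d_head, d_model)

out = multi_head_attention(X, heads, W_o)
print(len(out), len(out[0]))  # 3 4
```

Because each head works in a reduced dimension, the total cost stays close to that of a single full-width attention head, while the separate projections let each head learn a different notion of relevance.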

This approach has enabled language models that use context more effectively and handle complex language tasks, leading to significant improvements in machine translation, text summarization, and question answering. Attention is also a key reason behind the success of large language models: because the computation for all positions can run in parallel rather than step by step as in a recurrent network, these models can be trained efficiently on vast amounts of data and learn intricate patterns in human language.
